Any parent who has tried to comfort a child with an ear infection will appreciate Fan-Gang Zeng’s prediction.

“With a smartphone, you’ll be able to take a picture of the child’s ear and send it to the doctor or a website,” says the University of California, Irvine (UCI) professor of otolaryngology, biomedical engineering, cognitive sciences, and anatomy & neurobiology, who also directs the campus’s Center for Hearing Research. “You won’t need to take the child to the emergency room, which is not a pleasant experience for them.”

Zeng believes “this will become the norm in the next few years,” thanks to artificial intelligence. With just that smartphone photo, an AI “agent” will be able to determine whether the child’s ear infection will go away in a few days, whether antibiotics are necessary or whether a follow-up appointment should be scheduled.

Indeed, AI has the potential to revolutionize hearing care, according to research that Zeng and his colleagues from Duke University, the University of Washington and University College London’s Ear Institute detail in an article published in the journal Nature Machine Intelligence.

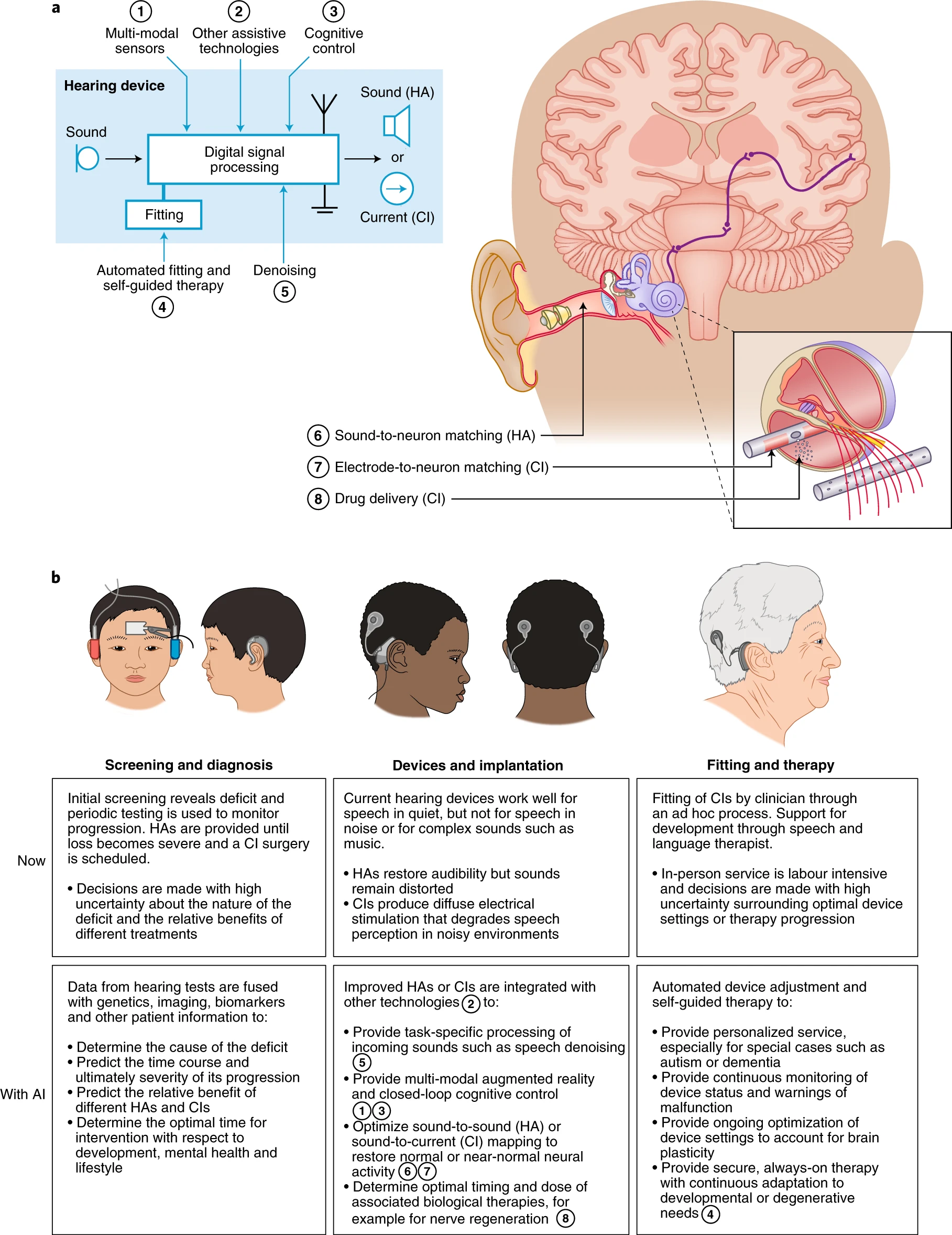

“Hearing loss is a huge problem,” says Zeng, the paper’s co-corresponding author, who points to World Health Organization projections that 1 in 4 individuals will have it by 2050. That translates to nearly 2.5 billion people with hearing deficits, 700 million of them requiring rehabilitation.

The numbers will rise because people are living longer, the pool of older folks is growing larger and our living environments are getting noisier, says Zeng, who adds that drug use – including the daily consumption of aspirin – can contribute to hearing problems.

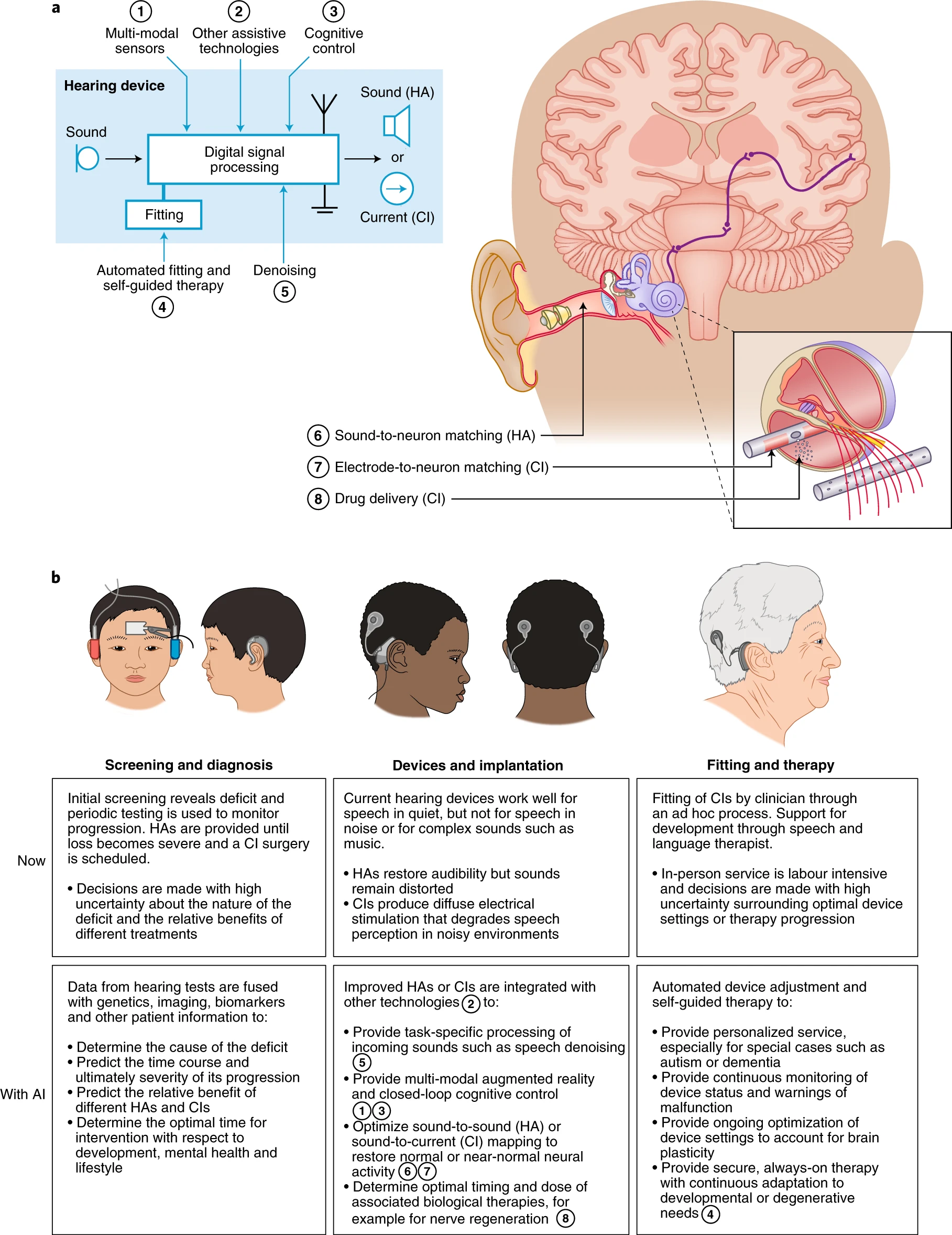

Speaking of drugs, none currently cures hearing loss. Treatments range from removing built-up ear wax to letting a minor infection pass to prescribing antibiotics, hearing aids or cochlear implants, which restore hearing through direct electrical stimulation of the auditory nerve. Zeng says that AI can determine the best treatment option and even the adjustments needed for the fit and function of hearing aids and implants.

“Fan-Gang Zeng and his colleagues have taken on the herculean task of delineating how artificial intelligence can help address nearly every specific hearing loss-related challenge in the clinic,” says Uri Manor, assistant research professor at the Salk Institute for Biological Studies and director of the Waitt Advanced Biophotonics Core Facility. “Not long ago, some of their suggestions may have seemed more appropriate for a science fiction novel, but today they are all entirely feasible, largely thanks to the synergistic combination of ever-improving sensor technology, big data and, of course, deep learning-based approaches.”

The most serious hearing issues occur when allergies, bacteria or a virus cause fluid to enter the middle ear. Zeng says that middle ear infection is the No. 1 reason for children to visit a hospital, but fortunately, the infection goes away without treatment for most of those patients. By informing parents via telehealth that their child has the sort of infection that will soon resolve on its own, AI can greatly reduce the number of children rushed to emergency rooms.

This is especially critical now that COVID-19 has left ERs overrun, but even more promising is that the AI diagnosis process can be duplicated affordably worldwide, including in places where people have little or no access to quality healthcare.

“You need hardware, software and technology, including AI, but luckily, cellphones have become so pervasive,” Zeng says. “Everybody – whether in the north or the south, rich or poor places – has access to cellphones. With a smartphone, they can connect to a network. I think that removes most, if not all, barriers to access.”

He is now researching how AI could transform the treatment of tinnitus, or ringing in the ears, for which there is also no cure. Coupled with advances in diagnosing hearing loss, that work would put medical practitioners around the globe well on the way to lowering costs and improving outcomes in hearing care.

“AI may not solve all the problems,” Zeng says, “but what if you could solve a majority of problems? Let machines do the routine parts for doctors to free up time so they can work on the tough cases.”

Manor has a personal connection to Zeng’s research.

“As a hearing-impaired person who also uses deep learning for their research,” he says, “I could not be more ecstatic about what the future holds for us, and this manuscript does a brilliant job of articulating that landscape.”